The universal pain of lyrics and melodies teetering on the tip of your tongue has been solved by Google.

Yesterday (15/10), Google announced its newest feature, “hum to search”, which helps users track down the earworms they can’t quite remember. When a user hums, sings, or whistles a melody, Google can identify the song, even without the artist name, lyrics, or perfect pitch.

Do you know that song that goes, “da na na na na do do?” We bet Google Search does. 😉 Next time a song is stuck in your head, just #HumToSearch into the Google app and we’ll identify the song. Perfect pitch not required → https://t.co/xOFYTukjOk #SearchOn pic.twitter.com/3LRN4HJMKG

— Google (@Google) October 15, 2020

Right now, the newly rolled out feature is available in English on iOS, and in more than 20 languages on Android.

Try it yourself by following the steps below:

- Launch the latest version of the Google app, search widget or Google Assistant.

- Tap the mic icon and say “What’s this song?” or click the “Search a song” button.

- Hum the tune for 10-15 seconds.

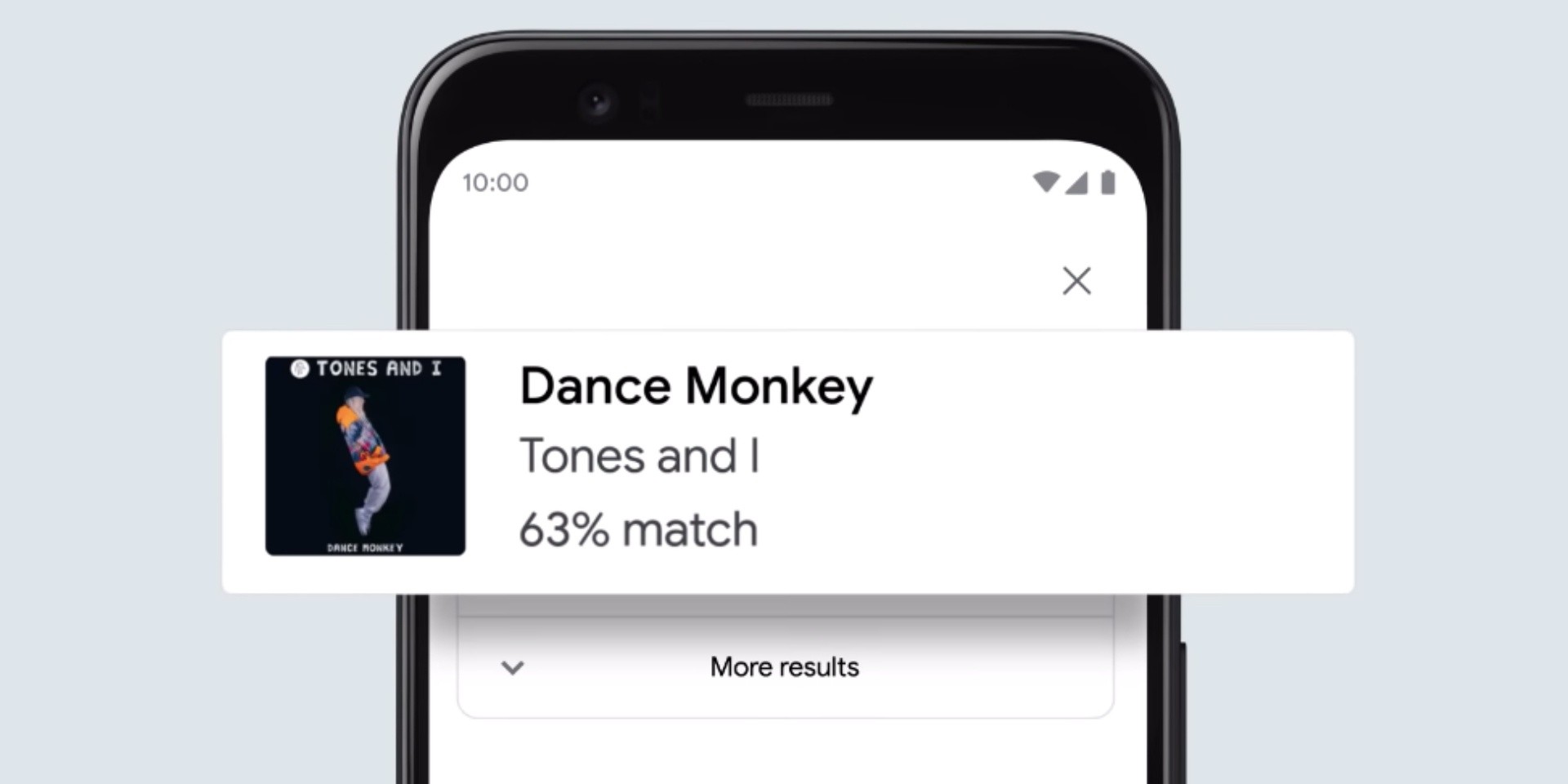

While not completely foolproof, the machine learning algorithm is said to return a list of potential song matches, led by the most likely options. Users can then explore the chosen track through accompanying features: listening to the song on various platforms, getting more information on the song and artist, watching music videos, and even reading lyrics and analyses.

The new feature is part of Google’s Search On announcements, which also include functions that help you find an exact moment in a video, a passage in a webpage, and more.

Explaining what goes on behind the innovation, Krishna Kumar, Senior Product Manager at Google Search, said, “An easy way to explain it is that a song’s melody is like its fingerprint: They each have their own unique identity. We've built machine learning models that can match your hum, whistle or singing to the right ‘fingerprint’.”

In more detail, Google’s machine learning models transform the hummed melody into a number-based sequence. The models have been trained to “identify songs based on a variety of sources”. The algorithms also strip away the instruments, the timbre and tone of the voice, and other details, leaving only the song’s number-based sequence, the “fingerprint” that is crucial to tracking down the song.
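To get a feel for what a “number-based sequence” could look like, here is a minimal Python sketch. It is not Google’s method: Google describes trained machine learning models, while this toy swaps in a much simpler idea, encoding a melody as the semitone steps between consecutive notes. The function names `hz_to_semitone` and `melody_to_intervals` are made up for illustration. The point is only that such a sequence stays the same when the tune is hummed in a different key or slightly off pitch.

```python
import math

def hz_to_semitone(freq_hz):
    """Round a frequency to the nearest MIDI note number (A4 = 440 Hz = 69)."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))

def melody_to_intervals(freqs_hz):
    """Toy 'fingerprint' (an assumption, not Google's model):
    the semitone step between each pair of consecutive notes."""
    notes = [hz_to_semitone(f) for f in freqs_hz]
    return [b - a for a, b in zip(notes, notes[1:])]

# Seven notes: C4 C4 G4 G4 A4 A4 G4, given as frequencies in Hz.
original = [261.63, 261.63, 392.00, 392.00, 440.00, 440.00, 392.00]

# The same tune "hummed" about three semitones higher and a little sharp.
hummed = [f * 1.2 for f in original]

print(melody_to_intervals(original))  # [0, 7, 0, 2, 0, -2]
print(melody_to_intervals(hummed))    # [0, 7, 0, 2, 0, -2]
```

Because only the steps between notes are kept, the absolute key and the sound of the voice drop out of the sequence, which is the same intuition behind the “fingerprint” analogy.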

That sequence is then run against thousands of potential matches from all over the world. Results should only become more accurate over time, as “our machine learning models recognise the melody of the studio-recorded version of the song, which we can use to match it with a person’s hummed audio”.
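The matching step can be pictured with a small Python sketch as well. Everything here is an assumption for illustration: the catalogue entries are invented number sequences with placeholder titles, `rank_matches` is a hypothetical helper, and the similarity score comes from Python’s standard `difflib.SequenceMatcher` rather than anything Google has described. It simply shows how an imperfect hum can still rank the right song first.

```python
from difflib import SequenceMatcher

# Invented "fingerprints" for placeholder songs (not real data).
CATALOGUE = {
    "Song A": [0, 7, 0, 2, 0, -2, -2, 0, -1],
    "Song B": [2, 2, -4, 2, 2, -4, 4, 1, 2],
    "Song C": [-2, -2, 5, -1, -2, 3, 0, 0, -3],
}

def rank_matches(hum, catalogue):
    """Return (title, score) pairs sorted from most to least similar.

    The score is difflib's similarity ratio between 0 and 1, used here
    as a stand-in for whatever a real system would compute.
    """
    scored = [
        (title, SequenceMatcher(None, hum, sequence).ratio())
        for title, sequence in catalogue.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# A hum of "Song A" with one wrong step and the last step missing.
hum = [0, 7, 0, 2, 1, -2, -2, 0]

for title, score in rank_matches(hum, CATALOGUE):
    print(f"{title}: {score:.2f}")
```

Returning a ranked list instead of a single answer mirrors how the feature presents several likely options and lets the user pick the right one.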

Watch a video on how to use it here, which uses Tones And I’s ‘Dance Monkey’ as an example:
